Evaluation of YBA MAGAR – YBA Surpasses Industry Benchmarks and Outperforms Leading Tech Giants

November 2025

Executive Summary

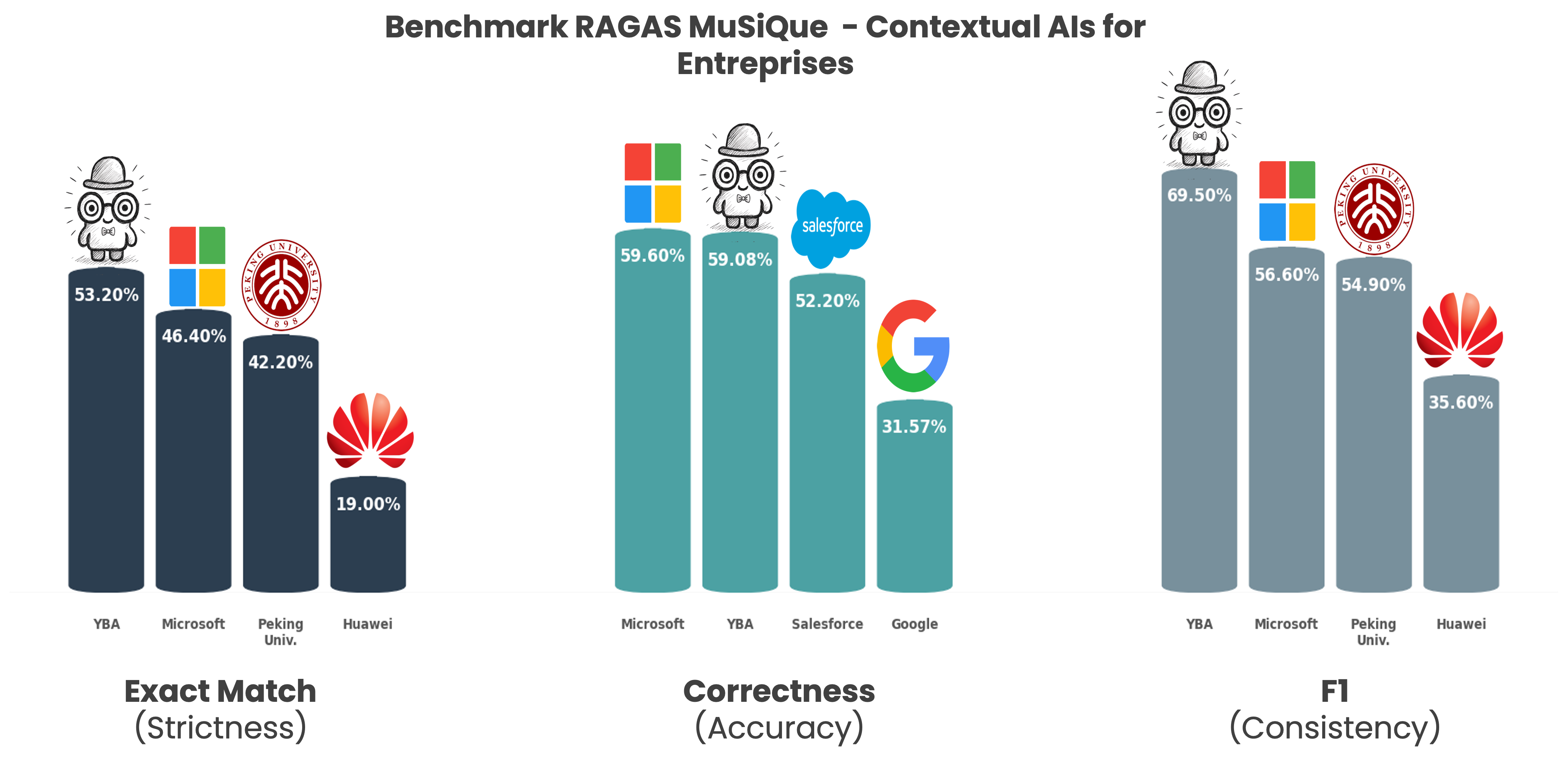

YBA MAGAR achieves 59.08% answer correctness on the MuSiQue benchmark (500-question dev subset). This nearly matches Microsoft’s fine-tuned PIKE RAG (59.60%) and outperforms systems from Salesforce, Google, Huawei, and Peking University. With the highest Exact Match (53.2%) and F1 (69.5%) scores among the compared systems, MAGAR sets a new standard in retrieval-augmented reasoning for enterprise AI.

Abstract

We introduce MAGAR (Multi-Agent Graph-Augmented RAG), a retrieval-augmented generation framework that combines graph-based retrieval with multi-agent orchestration to support robust, multi-step in-context reasoning over company knowledge. To assess MAGAR’s generality for multi-hop reasoning, we benchmarked it on MuSiQue, a public multi-document multi-hop question-answering dataset. This report presents the evaluation results drawn from our experiment materials, explains the evaluation protocol, and provides an appendix with reproducibility notes. All numeric results in this report are taken from the supplied evaluation materials and have not been altered. These results confirm MAGAR’s effectiveness in retrieval-augmented reasoning and position YBA among the leaders in multi-hop question answering performance.

Comparison results

Figure: Comparative performance of YBA RAG (MAGAR) against leading in-context systems

About YBA.ai

YBA.ai builds in-context agents that automate knowledge work for go-to-market teams. Our MAGAR (Multi-Agent Graph-Augmented RAG) technology combines graph-based retrieval with multi-agent orchestration to deliver robust, multi-step reasoning and evidence-backed answers from a company’s data and knowledge bases.

Introduction

Enterprise GTM teams increasingly rely on accurate, evidence-backed answers drawn from internal documentation (handbooks, playbooks, product docs, CRM notes). Multi-hop questions — those that require linking facts across several documents and performing intermediate reasoning — remain a major challenge for standard retrieval-plus-generation pipelines.

MAGAR was developed to address this: it augments vector retrieval with a graph representation of knowledge and coordinates multiple specialized agents to produce grounded answers with provenance. MuSiQue is a relevant public benchmark for multi-hop QA; we used it to validate MAGAR’s ability to chain evidence and produce correct answers across documents.

Why MuSiQue?

MuSiQue is well suited to validating MAGAR because it rigorously probes complex, multi-step reasoning. Unlike simple Q&A, a MuSiQue question requires the system to:

- Reason Across Multiple Documents: The information needed for the answer is scattered and must be found in different places.

- Integrate Evidence: The system has to perform intermediate reasoning steps and link together semantically diverse facts to form a final, consistent answer.

This need to integrate evidence and keep intermediate reasoning steps in order aligns directly with MAGAR’s core strengths: modeling relationships between information chunks and preserving coherent task sequences through its graph-based retrieval.
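To make the idea concrete, here is a toy sketch of how graph links can surface a chunk that plain similarity search misses on a two-hop question. It is not MAGAR’s implementation: the chunk texts, the `EDGES` map, and the helper names (`tokens`, `similarity`, `graph_augmented_retrieve`) are hypothetical, and a simple word-overlap score stands in for vector similarity.

```python
import re
from collections import deque


def tokens(text: str) -> set[str]:
    """Lowercased word tokens; punctuation is dropped."""
    return set(re.findall(r"\w+", text.lower()))


def similarity(query: str, chunk: str) -> float:
    """Stand-in for vector similarity: share of query tokens present in the chunk."""
    q = tokens(query)
    return len(q & tokens(chunk)) / len(q) if q else 0.0


def graph_augmented_retrieve(query, chunks, edges, top_k=1, hops=1):
    """Seed with the top_k most similar chunks, then expand along graph edges."""
    # Step 1: similarity-based seeding (sorted() is stable, so ties keep dict order).
    ranked = sorted(chunks, key=lambda cid: similarity(query, chunks[cid]), reverse=True)
    depth = {cid: 0 for cid in ranked[:top_k]}
    # Step 2: breadth-first expansion, following links up to `hops` steps away.
    queue = deque(depth)
    while queue:
        cid = queue.popleft()
        if depth[cid] == hops:
            continue
        for nbr in edges.get(cid, []):
            if nbr not in depth:
                depth[nbr] = depth[cid] + 1
                queue.append(nbr)
    # Dict order: seeds first, then chunks in the order they were reached.
    return list(depth)


# Hypothetical two-hop example: chunks are linked when they mention the same entity.
CHUNKS = {
    "c1": "Film A was directed by Person B.",
    "c2": "Person B was born in City C.",
    "c3": "City C is a port town.",
}
EDGES = {"c1": ["c2"], "c2": ["c1", "c3"], "c3": ["c2"]}
QUERY = "Where was the director of Film A born?"

if __name__ == "__main__":
    print(graph_augmented_retrieve(QUERY, CHUNKS, EDGES, top_k=1, hops=1))
```

With `top_k=1`, similarity alone returns only `c1` (the film chunk). One hop along the graph adds `c2`, which holds the birthplace needed to answer the second hop.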

MuSiQue dataset paper: https://arxiv.org/abs/2108.00573

Evaluation Methodology

To ensure an objective and comprehensive assessment of MAGAR’s performance, we evaluated the system using standard metrics widely adopted in Retrieval-Augmented Generation (RAG) research.

Evaluation Metrics:

- Answer Correctness: Measures how factually accurate and complete the generated response is compared to the ground truth, scored from 0 to 1 by an LLM-based judge.

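- Exact Match (EM): Evaluates whether the ground-truth answer (keywords or phrases) appears verbatim within the generated text. This is a containment-based variant of EM; the conventional metric requires the normalized answer to equal the ground truth exactly.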

- F1 Score: Balances precision and recall over the tokens shared by the generated answer and the ground truth, capturing both the accuracy and the completeness of generated answers:

  precision = matched tokens / tokens in the generated answer

  recall = matched tokens / tokens in the ground-truth answer

  F1 Score = 2 × (precision × recall) / (precision + recall)

- RAGAS (RAG Assessment Framework): Provides an LLM-based holistic evaluation of retrieval and generation quality, measuring factual accuracy, relevance, and completeness.
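The EM and F1 definitions above can be sketched in Python as follows. The normalization (lowercasing and keeping word tokens) is an assumption; SQuAD-style evaluation scripts normalize further, for example by also removing articles, so scores from this sketch may differ slightly from official ones. Answer Correctness and RAGAS depend on an LLM judge and are not reproduced here; the function names are hypothetical.

```python
import re
from collections import Counter


def normalize(text: str) -> list[str]:
    # Assumed normalization: lowercase, keep alphanumeric word tokens only.
    return re.findall(r"\w+", text.lower())


def exact_match_contains(prediction: str, ground_truth: str) -> float:
    """Containment EM as described above: 1.0 if the normalized ground truth
    appears as a contiguous token span inside the normalized prediction."""
    pred, gold = normalize(prediction), normalize(ground_truth)
    n = len(gold)
    if n == 0:
        return 0.0
    return float(any(pred[i:i + n] == gold for i in range(len(pred) - n + 1)))


def token_f1(prediction: str, ground_truth: str) -> float:
    """Token-level F1 using the precision and recall formulas above."""
    pred, gold = normalize(prediction), normalize(ground_truth)
    # Matched tokens are counted with multiplicity (multiset intersection).
    matched = sum((Counter(pred) & Counter(gold)).values())
    if matched == 0:
        return 0.0
    precision = matched / len(pred)
    recall = matched / len(gold)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(exact_match_contains("The capital is Paris", "Paris"))
    print(token_f1("The capital is Paris", "Paris"))
```

For `token_f1("The capital is Paris", "Paris")`, precision is 1/4 and recall is 1/1, so F1 = 2 × 0.25 × 1 / 1.25 = 0.4, while the containment EM is 1.0. This is why a containment-style EM can exceed F1 on verbose answers.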

Results of Our Tests

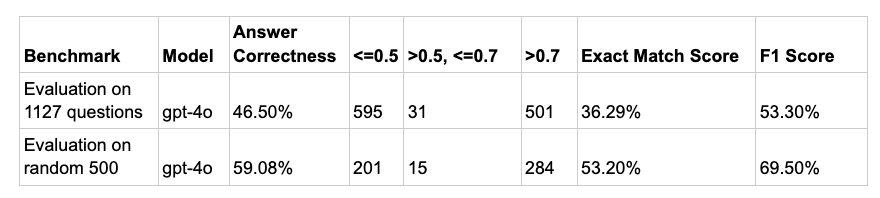

We tested MAGAR on the MuSiQue development (dev) set in two scenarios to ensure comprehensive validation and confidence in the results:

- Complete Answerable Dev Set Evaluation: We used the full set of 1,127 questions to comprehensively assess the framework’s performance across the entire range of question types and difficulty levels.

- Random Answerable Subset Evaluation: We also evaluated the system on a random subset of 500 answerable questions. This tested MAGAR’s robustness and consistency across diverse question types in a smaller, representative sample, and mirrors the 500-question setup used by several of the published baselines discussed below.
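A reproducible version of the random-subset draw might look like the sketch below. The report does not state how the 500 questions were selected, so the `answerable` and `id` field names, the function name `sample_answerable_subset`, and the fixed `seed` are assumptions. Fixing the seed (or publishing the sampled question IDs) is what lets others rerun exactly the same subset.

```python
import random


def sample_answerable_subset(records, n=500, seed=0):
    """Return a reproducible random sample of n answerable questions.

    Assumes each record is a dict with a boolean "answerable" field
    (hypothetical layout; adapt to the actual dataset fields).
    """
    answerable = [r for r in records if r.get("answerable", False)]
    if n > len(answerable):
        raise ValueError(f"requested {n} questions, only {len(answerable)} answerable")
    # A dedicated Random instance keeps the draw independent of global RNG state.
    rng = random.Random(seed)
    return rng.sample(answerable, n)


if __name__ == "__main__":
    toy = [{"id": f"q{i}", "answerable": i % 3 != 0} for i in range(30)]
    subset = sample_answerable_subset(toy, n=5, seed=42)
    print([r["id"] for r in subset])
```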

The benchmark results show that our technology achieved an Answer Correctness of 46.50% on the full 1,127-question evaluation, with an Exact Match of 36.29% and an F1 Score of 53.30%. On the random 500-question subset, scores were higher: 59.08% Answer Correctness, 53.20% Exact Match, and 69.50% F1 Score, indicating higher accuracy and completeness on the smaller evaluation set. The 500-question figures are the ones directly comparable to the baselines below that also evaluate on 500 random dev questions.
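Because the baselines below were scored on different random samples, it helps to know how much a mean score can move from sampling alone. The following sketch is an illustration, not part of the original evaluation. It computes a normal-approximation 95% confidence interval for a mean of per-question scores in [0, 1], plus the worst-case half-width for a given sample size (a score bounded in [0, 1] has a standard deviation of at most 0.5). The function names are hypothetical.

```python
import math
from statistics import mean, stdev


def mean_with_ci(scores, z=1.96):
    """Mean of per-question scores and the half-width of a normal-approximation CI.

    Needs at least two scores (stdev uses the n - 1 denominator).
    """
    m = mean(scores)
    half_width = z * stdev(scores) / math.sqrt(len(scores))
    return m, half_width


def worst_case_half_width(n, z=1.96):
    """Largest CI half-width for n scores in [0, 1], using the bound sd <= 0.5."""
    return z * 0.5 / math.sqrt(n)


if __name__ == "__main__":
    for n in (500, 1127):
        print(n, round(100 * worst_case_half_width(n), 1), "percentage points")
```

For 500 questions the worst-case half-width is about 4.4 percentage points (about 2.9 for 1,127), so differences of under a point, such as 59.08% versus 59.60%, fall within sampling noise when systems are scored on different random samples.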

Benchmarking Against Industry and Academic Work

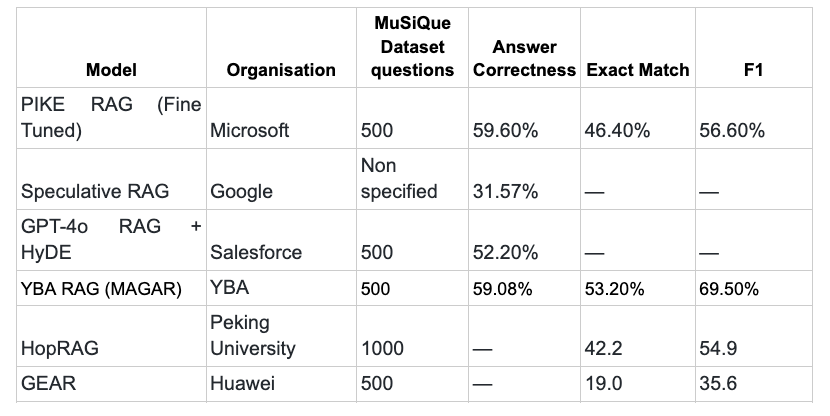

The evaluation setups reported by other groups are summarized below.

Microsoft - PIKE RAG:

- Published on 01-01-2025

- Fine-tuned LLM; the fine-tuning data includes the MuSiQue “train” split.

- Uses 500 random questions from the MuSiQue dev set

- Answer Correctness = 59.60%, EM Score = 46.40%, F1 Score = 56.60%

Google - Speculative RAG:

- Published on 11-07-2024

- Evaluated on an unspecified number of MuSiQue questions.

- Reports only Answer Correctness = 31.57%.

Salesforce - GPT-4o RAG + HyDE:

- Published on 16-12-2024

- Uses 500 randomly selected questions from the MuSiQue dev split.

- Reports Answer Correctness = 52.20%

Peking University - HopRAG:

- Published on 18-02-2025

- Uses 1,000 questions from the MuSiQue dev set

- EM Score = 42.2%, F1 Score = 54.9%

Huawei - GeAR:

- Published on 24-12-2024

- Uses 500 randomly selected questions

- EM Score = 19.0%, F1 Score = 35.6%

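The following table compares our RAG with these systems (n/r = not reported):

| System | Evaluation set | Answer Correctness | EM | F1 |
|---|---|---|---|---|
| YBA MAGAR | 500 random answerable dev questions | 59.08% | 53.20% | 69.50% |
| YBA MAGAR | 1,127 answerable dev questions | 46.50% | 36.29% | 53.30% |
| Microsoft PIKE RAG (fine-tuned) | 500 random dev questions | 59.60% | 46.40% | 56.60% |
| Salesforce GPT-4o RAG + HyDE | 500 random dev questions | 52.20% | n/r | n/r |
| Google Speculative RAG | Unspecified | 31.57% | n/r | n/r |
| Peking University HopRAG | 1,000 dev questions | n/r | 42.2% | 54.9% |
| Huawei GeAR | 500 random questions | n/r | 19.0% | 35.6% |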

Performance Analysis and Industry Comparison

Comparing YBA RAG (MAGAR) with other retrieval-augmented generation systems shows that it achieves one of the highest overall performances on the MuSiQue benchmark. With an Answer Correctness of 59.08%, MAGAR performs nearly on par with Microsoft’s fine-tuned PIKE RAG (59.60%) and surpasses Salesforce’s GPT-4o RAG + HyDE (52.20%) and Google’s Speculative RAG (31.57%). On Exact Match and F1, it also exceeds Peking University’s HopRAG (42.2% EM, 54.9% F1) and Huawei’s GeAR (19.0% EM, 35.6% F1). Notably, MAGAR achieves the best Exact Match (53.2%) and F1 score (69.5%) among all compared models, including PIKE RAG (46.4% EM, 56.6% F1), indicating closer agreement between its generated answers and the ground truth. This suggests that MAGAR’s multi-agent retrieval mechanism effectively improves answer accuracy and contextual alignment.
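The percentage-point gaps behind this comparison can be recomputed with a short script. The `REPORTED` dictionary simply transcribes the figures given in this report (HopRAG values follow the paragraph above; `None` marks a metric a system did not report), and `gaps_vs` is a hypothetical helper. Because the baselines differ in sample size and setup (for example, PIKE RAG is fine-tuned on MuSiQue training data), the gaps are indicative rather than strict head-to-head results.

```python
# Scores in percent as listed in this report; None = not reported.
REPORTED = {
    "YBA MAGAR (500 subset)": {"ac": 59.08, "em": 53.20, "f1": 69.50},
    "Microsoft PIKE RAG": {"ac": 59.60, "em": 46.40, "f1": 56.60},
    "Salesforce GPT-4o RAG + HyDE": {"ac": 52.20, "em": None, "f1": None},
    "Google Speculative RAG": {"ac": 31.57, "em": None, "f1": None},
    "Peking University HopRAG": {"ac": None, "em": 42.2, "f1": 54.9},
    "Huawei GeAR": {"ac": None, "em": 19.0, "f1": 35.6},
}


def gaps_vs(reference, table):
    """Percentage-point difference (reference minus baseline) for each metric
    that both the reference and the baseline report."""
    ref = table[reference]
    out = {}
    for name, scores in table.items():
        if name == reference:
            continue
        out[name] = {
            metric: round(ref[metric] - value, 2)
            for metric, value in scores.items()
            if value is not None and ref[metric] is not None
        }
    return out


if __name__ == "__main__":
    for name, gaps in gaps_vs("YBA MAGAR (500 subset)", REPORTED).items():
        print(f"{name}: {gaps}")
```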

Please refer to the comparison chart shown above.

Note: All benchmark results are derived from the MuSiQue development set and evaluated using standard RAG evaluation metrics.